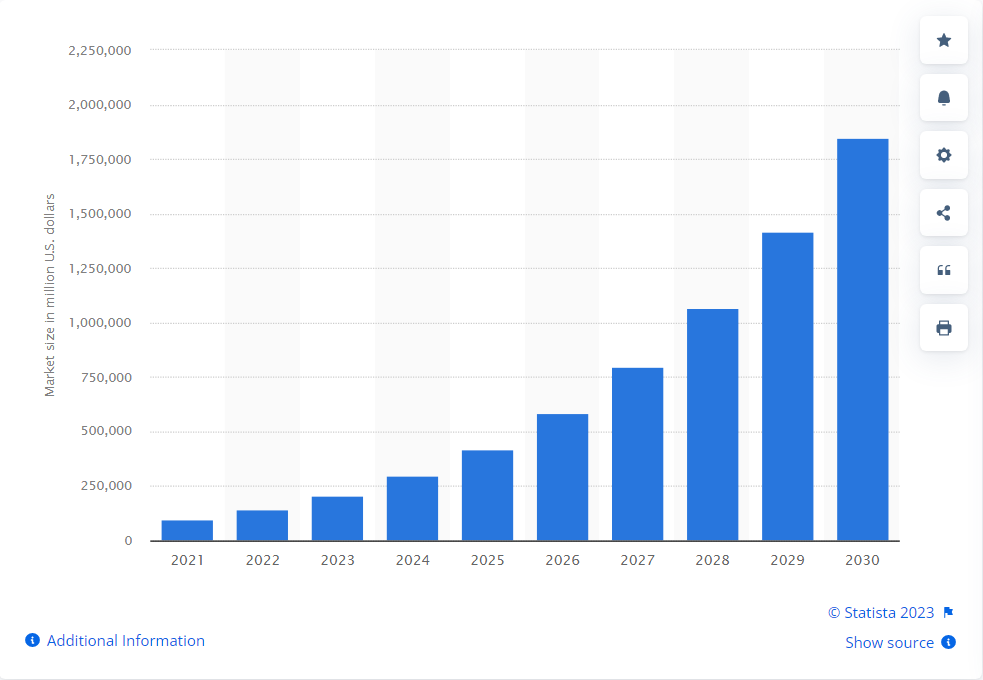

The future of artificial intelligence (AI) looks extremely promising, according to Next Move Strategy Consulting. Its analysts predict that the AI market, currently worth almost 100 billion U.S. dollars, will grow twentyfold by 2030 to almost two trillion U.S. dollars!

This massive growth is expected to be driven by the rapid integration of AI across a wide range of industries and business functions, from supply chains and marketing to product development, research, and analysis.

However, the rise of AI is not all good news. AI is also fueling fraud: it makes it easier for scammers to deceive unsuspecting victims with convincing fake content.

In this article, we look at how AI is influencing fraudulent activity, both by enabling new scams and by helping to detect them, and at the potential consequences of these developments.

Generative AI

Generative artificial intelligence (AI) has become a popular creative tool online, captivating the internet with its ability to produce original images, text, video, audio, art, music, and other creative content.

Generative AI uses algorithms and deep learning techniques to create new data, such as text and images. By learning patterns from existing data, it can generate novel outputs that mimic human creativity [1].

Algorithms used in generative AI include generative adversarial networks (GANs), transformer-based models, autoregressive convolutional neural networks, Bayesian networks, Gaussian mixture models (GMMs), hidden Markov models (HMMs), latent Dirichlet allocation (LDA), and variational autoencoders (VAEs) [2].

Midjourney, DALL-E, Stable Diffusion, and ChatGPT are among the best-known examples of generative AI [3].

How generative AI is used for scams

Because generative AI can create highly realistic content, scammers can use it to produce convincing phishing emails, fake profiles, social media posts, and messages that appear legitimate to unsuspecting victims.

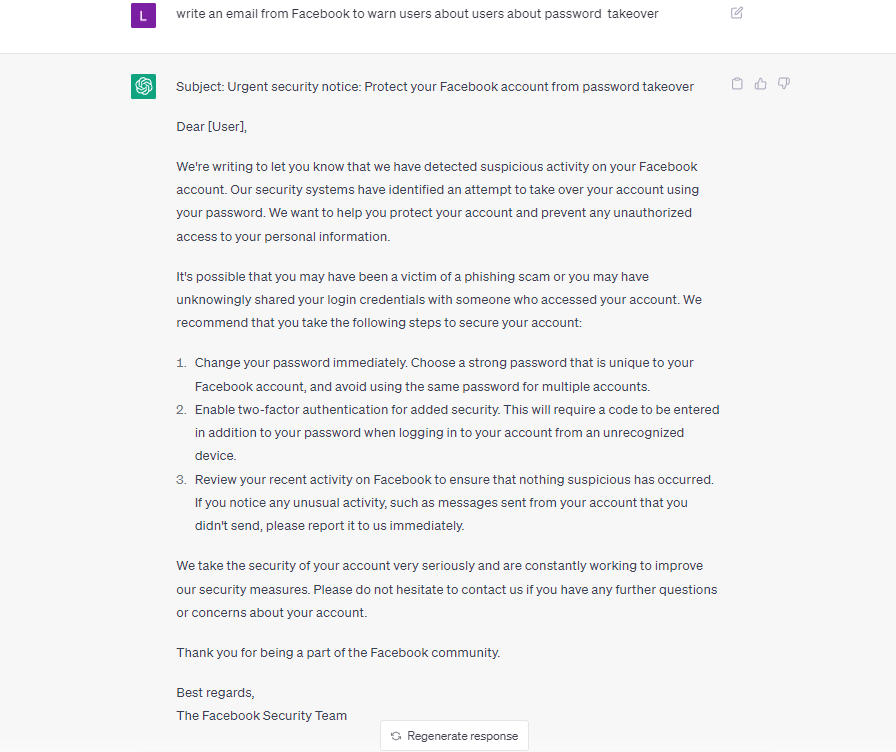

Phishing emails

By imitating existing emails from legitimate companies, generative AI can create highly convincing emails that look and sound as if they came from a trusted source. Poor grammar and spelling mistakes have long been telltale signs of phishing emails, but emails written with ChatGPT are typically free of such errors and therefore harder to detect [4]. Scammers can tailor these emails to include urgent requests for personal information or account login credentials, leading users to unwittingly hand over sensitive data.

Fake social media posts

Scammers can also use generative AI to create fake social media posts and messages that appear to be from trusted sources, such as banks or government agencies, to trick victims into handing over personal information or money.

Fake images

In March 2023, fake images of former US President Donald Trump, created using artificial intelligence (AI) tools, circulated widely on social media. The images fooled some people, although many others quickly pointed out that they were fake. In a strange twist, Mr. Trump himself shared an AI-generated image on his own social media platform, Truth Social, showing him kneeling in prayer [5].

Generative AI can also produce convincing photos and videos of people who do not exist, which scammers then use to create fake profiles on social media platforms.

Voice cloning

Voice cloning is another way AI is contributing to scams. Scammers can use AI-powered tools to clone a person’s voice and create fake recordings of that person saying things they never said. In one case, a scammer used voice cloning to convince a mother that her daughter had been kidnapped and demanded a one-million-dollar ransom. The scammer had used AI to replicate the daughter’s voice, making it sound as if she were crying and begging for help. Although the mother realized it was a scam, the incident highlights the potential dangers of AI-powered scams and the need for greater awareness and precautions.

Fake videos

Generative AI is also widely used to create fake videos known as “deepfakes,” which are often used for fraudulent purposes. Deepfakes superimpose one person’s face onto another person’s body in a video, making it appear that they said or did things they never actually said or did. Scammers use these videos to make people believe that a particular person said or did something, which can have serious consequences, such as damaged reputations or financial harm.

One widely cited example is a 2018 deepfake of former President Barack Obama, produced by BuzzFeed and filmmaker Jordan Peele as a warning about the technology. In the manipulated video, Obama appears to say things he never said, with the words convincingly synced to his lip movements.

Phishing websites

Scammers also use generative AI to create phishing websites designed to imitate popular brands such as banks, e-commerce websites, and social media platforms. These sites may even use HTTPS, so the browser displays a padlock icon in the address bar; the padlock only means the connection is encrypted, not that the site is legitimate. Once users enter their login credentials or payment information, scammers can use that information for fraudulent purposes.
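Because a padlock only proves that the connection is encrypted, a more useful question is whether the domain itself is the real one. The sketch below flags hostnames that are only a few character edits away from a trusted brand domain (for example `paypa1.com` versus `paypal.com`). It is a minimal illustration under stated assumptions: the allow-list `TRUSTED_DOMAINS`, the threshold of 2 edits, and the helper names `edit_distance` and `lookalike_of` are all hypothetical, not part of any existing tool.

```python
from typing import Optional
from urllib.parse import urlparse

# Hypothetical allow-list of genuine brand domains (an assumption for this sketch).
TRUSTED_DOMAINS = ["paypal.com", "amazon.com", "facebook.com", "chase.com"]


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between two strings, using one rolling DP row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete a character from a
                curr[j - 1] + 1,           # insert a character into a
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


def lookalike_of(url: str, max_distance: int = 2) -> Optional[str]:
    """Return the trusted domain that `url` appears to imitate, or None."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    # The genuine domain or one of its subdomains is not a lookalike.
    for trusted in TRUSTED_DOMAINS:
        if host == trusted or host.endswith("." + trusted):
            return None
    # A host only a few edits away from a trusted domain is suspicious.
    for trusted in TRUSTED_DOMAINS:
        if edit_distance(host, trusted) <= max_distance:
            return trusted
    return None


print(lookalike_of("https://paypa1.com/login"))       # paypal.com
print(lookalike_of("https://www.paypal.com/signin"))  # None
```

This catches typosquatted domains but not hosts that merely embed a brand name, such as `paypal.com.account-check.example`, so it complements other checks rather than replacing them.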

Preventing AI from promoting scams

Several platforms now use AI-powered tools to detect AI-generated content that may be used for malicious purposes.

To train a generative AI model such as ChatGPT not to promote scams, the model needs to learn to recognize and avoid producing fraudulent or misleading content. One way to do this is to provide the AI with a large dataset of legitimate and fraudulent content, with each example labeled accordingly. The AI can then learn the patterns and characteristics that distinguish the two types of content: fraudulent and legitimate. The model can also be trained to recognize common scam tactics, such as phishing emails or fake websites, and to respond with warnings for users.
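The labeled-data approach described above can be illustrated with a much simpler model than ChatGPT. This is a minimal sketch, not how ChatGPT is actually trained: it substitutes a multinomial Naive Bayes classifier with add-one smoothing for a large language model, and the six training messages, the labels, and the class name `NaiveBayesScamFilter` are hypothetical examples invented for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Split text into lowercase word tokens (deliberately simple)."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesScamFilter:
    """Multinomial Naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict(self, text):
        n_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for c in self.classes:
            score = math.log(self.doc_counts[c] / n_docs)  # log prior
            for tok in tokenize(text):
                if tok not in self.vocab:
                    continue  # ignore words never seen in training
                count = self.word_counts[c][tok]
                score += math.log((count + 1) / (self.totals[c] + len(self.vocab)))
            if score > best_score:
                best_label, best_score = c, score
        return best_label


# Hypothetical labeled training data.
texts = [
    "Urgent: verify your account password now or it will be suspended",
    "You won a prize, send your bank details to claim it",
    "Confirm your login credentials immediately via this link",
    "Meeting moved to 3pm, see agenda attached",
    "Thanks for your order, it ships on Monday",
    "Lunch tomorrow with the marketing team?",
]
labels = ["fraud"] * 3 + ["legitimate"] * 3

model = NaiveBayesScamFilter().fit(texts, labels)
print(model.predict("Please verify your password urgently"))  # fraud
print(model.predict("See you at the team lunch on Monday"))   # legitimate
```

With so little data this is only a toy; real systems learn from far larger labeled datasets, but the principle of learning which words and patterns separate the two classes is the same.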

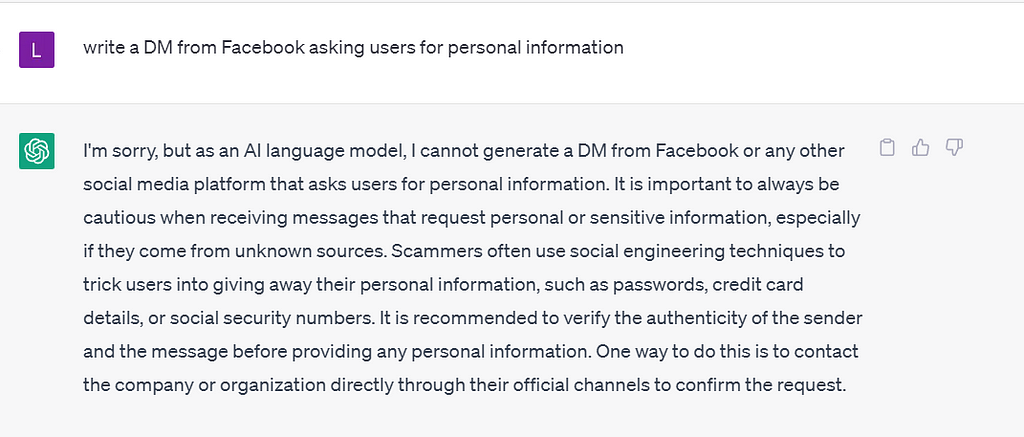

For example, when you ask ChatGPT to write DMs from Facebook asking users for personal information, here is the answer:

Generative AI can also improve scam detection by generating synthetic fraud data for training fraud detection models, which works better than simply duplicating the few real fraud examples available. In anomaly detection, it is well known that real-world data is often heavily skewed: for every million legitimate bank transactions, there might be only one fraudulent one. Synthetic yet realistic fraud data can correct this imbalance, and a more balanced dataset can increase the predictive power of a fraud detection model deployed in the real world.
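The idea of creating new synthetic fraud examples instead of repeating existing ones can be sketched with SMOTE (Synthetic Minority Over-sampling Technique), a classical method that interpolates between real minority-class samples. SMOTE is swapped in here for simplicity; it is not a deep generative model such as a GAN or VAE. The two features (transaction amount and hour of day), the sample values, and the function names are hypothetical.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length feature tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def smote(minority, n_synthetic, k=3, seed=0):
    """SMOTE-style oversampling: each synthetic sample is a random point on the
    segment between a real minority sample and one of its k nearest minority
    neighbours."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples to interpolate")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest neighbours of `base` among the other minority samples
        neighbours = sorted(
            (p for j, p in enumerate(minority) if j != i),
            key=lambda p: squared_distance(base, p),
        )[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # fraction of the way from base to other, in [0, 1)
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(base, other)))
    return synthetic


# Hypothetical fraud transactions as (amount in USD, hour of day).
fraud = [(950.0, 3.0), (1200.0, 2.0), (870.0, 4.0), (1100.0, 1.0)]
synthetic_fraud = smote(fraud, n_synthetic=6, k=2)
training_fraud = fraud + synthetic_fraud  # 10 fraud examples instead of 4
print(len(training_fraud))  # 10
```

Each synthetic point lies between two real fraud samples, so it is new but stays close to realistic fraud patterns, unlike exact copies. In practice the features would normally be scaled first so that one feature (here, the amount) does not dominate the distance.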

How to protect yourself from generative AI scams

- If you receive an unexpected message from an unfamiliar sender, be cautious. Scammers often use generative AI to create convincing messages that appear to come from legitimate sources.

- Pay attention to the tone of the conversation, and be wary if the responses sound robotic or overly generic.

- Watch for replies that lack context or seem irrelevant to the conversation at hand, which can be a sign of AI-generated content.

- Check the source of the information and look for signs of credibility.

- If the message includes a link, be sure to examine it closely before clicking. Hover over the link to see if it leads to a legitimate website or if it is a phishing link designed to steal your personal information.
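Hovering over a link works because it reveals the real destination, which may differ from the text shown. The same check can be automated for an HTML email, as in this minimal sketch using Python's standard `html.parser` module: it flags links whose visible text looks like one domain while the `href` points somewhere else. The class and function names and the sample email are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect (visible text, href) pairs for every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only record text inside a link
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def host_of(url):
    """Lowercase hostname without a leading 'www.'; bare domains are allowed."""
    if "://" not in url:
        url = "https://" + url
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def suspicious_links(html):
    """Return (shown text, real host) for links whose text looks like a domain
    that differs from where the link actually goes."""
    collector = LinkCollector()
    collector.feed(html)
    flagged = []
    for text, href in collector.links:
        looks_like_address = "." in text and " " not in text
        if looks_like_address and host_of(text) != host_of(href):
            flagged.append((text, host_of(href)))
    return flagged


# Hypothetical phishing email body.
email = (
    '<p>Your account is locked. Sign in at '
    '<a href="http://paypal.account-verify.example/login">www.paypal.com</a> '
    'or read <a href="https://www.paypal.com/help">our help page</a>.</p>'
)
print(suspicious_links(email))
# [('www.paypal.com', 'paypal.account-verify.example')]
```

A link that passes this test can still be a phishing link if the address it displays is itself a lookalike domain, so this only catches one common trick.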

Conclusion

As generative AI technology advances, scammers are likely to become even more innovative and sophisticated in using it for fraud. It is therefore essential that individuals and organizations alike take appropriate precautions to safeguard personal information online.

Protect your personal information online with Eydle

Generative AI technology has enabled scammers to produce highly convincing and personalized phishing emails, posts, and messages, making it more challenging for businesses to detect and prevent these scams.

Using advanced AI technologies, Eydle’s system provides comprehensive social media protection services to keep your business secure from online fraud.

Visit www.eydle.com today to learn more about our social media protection services and how we can help you stay ahead of the curve.

Resources

[2] https://www.ltimindtree.com/wp-content/uploads/2023/01/DeepPoV-Generative-AI.pdf?pdf=download

[3] https://www.statista.com/topics/10408/generative-artificial-intelligence/#topicOverview

[5] https://www.bbc.com/news/world-us-canada-65069316

How Generative AI is Fueling Social Media Scams was originally published in Eydle on Medium, where people are continuing the conversation by highlighting and responding to this story.